#Create cpp file with visual studio for mac install

CMake can be easily installed using brew as follows.

$ brew install cmake

Installing OpenCV from Source with CMake

Before I can build and install OpenCV I must clone its source repo from GitHub.
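The clone-and-build step can be sketched roughly as follows. This is a minimal sketch, not the exact commands used in this tutorial: the `run` and `build_opencv` helpers, the `DRY_RUN` variable and the `opencv/build` directory layout are illustrative assumptions, and the `-S`/`-B` and `--install` options assume a reasonably recent CMake (3.15 or later).

```shell
#!/bin/sh
# Sketch: clone OpenCV and build it with CMake.
# Set DRY_RUN=1 to print the commands without running them.

# Print a command, then run it unless DRY_RUN is set (hypothetical helper).
run() {
    echo "+ $*"
    if [ -z "${DRY_RUN:-}" ]; then
        "$@"
    fi
}

# build_opencv [SRC_DIR]: SRC_DIR is an assumed checkout location,
# defaulting to ./opencv. The build tree goes in SRC_DIR/build.
build_opencv() {
    src_dir="${1:-opencv}"
    build_dir="$src_dir/build"

    # Fetch the sources from the official GitHub repository.
    run git clone https://github.com/opencv/opencv.git "$src_dir" || return 1
    # Configure an out-of-source release build.
    run cmake -S "$src_dir" -B "$build_dir" -DCMAKE_BUILD_TYPE=Release || return 1
    # Compile, using parallel jobs.
    run cmake --build "$build_dir" --parallel || return 1
    # Install into CMake's default prefix (this usually needs sudo).
    run sudo cmake --install "$build_dir"
}
```

Calling `DRY_RUN=1 build_opencv` first prints the four commands without touching the network or the filesystem, which is a cheap way to review them before running `build_opencv` for real.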

To install Homebrew execute this command from your terminal.

$ /bin/bash -c "$(curl -fsSL )"

Installing CMake

CMake is a cross-platform build tool popular among native C/C++ developers. I use CMake in this tutorial to build and install OpenCV for C++ as well as to run the demo project.
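Before going further it can help to confirm that the tools this setup relies on are actually on the PATH. The sketch below is one way to do that; the `check_tool` and `check_prereqs` names are hypothetical, and including `git` in the list is an assumption based on the need to clone the OpenCV repo.

```shell
#!/bin/sh
# Sketch: report whether the tools this tutorial relies on are on PATH.

# check_tool NAME: succeed if NAME resolves to a command, fail otherwise.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found:   $1"
    else
        echo "missing: $1"
        return 1
    fi
}

# check_prereqs: check every tool and return non-zero if any is missing.
check_prereqs() {
    status=0
    for tool in clang brew cmake git; do
        check_tool "$tool" || status=1
    done
    return "$status"
}
```

Running `check_prereqs` prints one line per tool. Anything reported as missing can be installed through Xcode's tooling or brew before attempting the OpenCV build.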

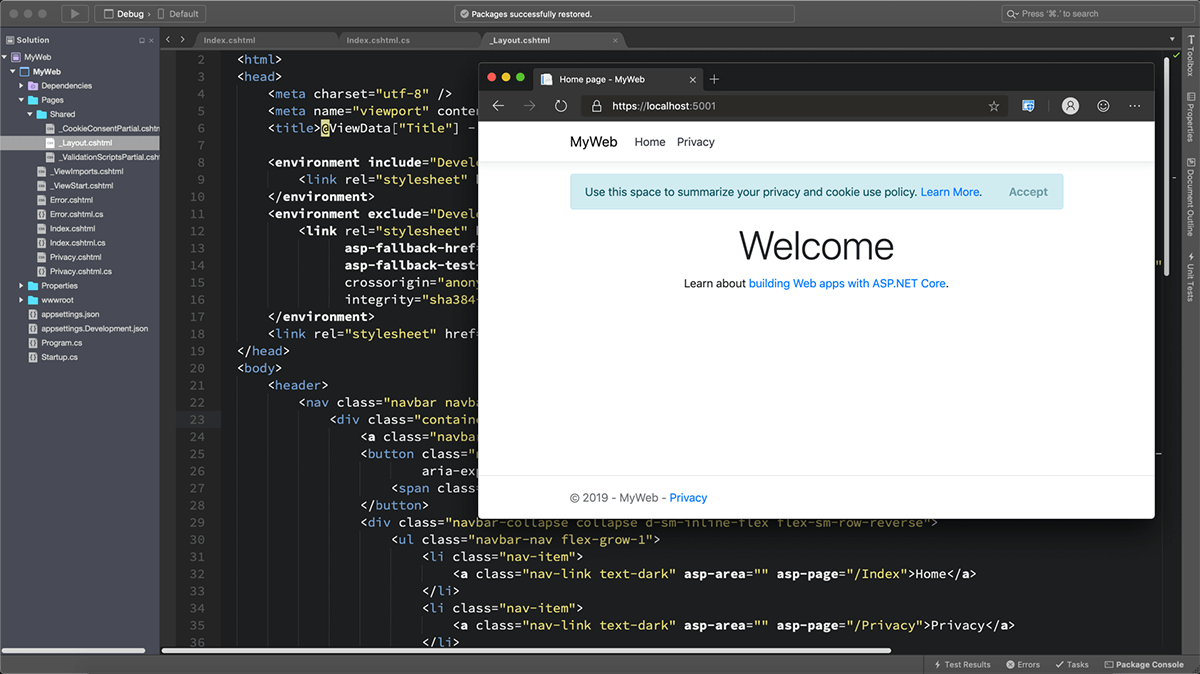

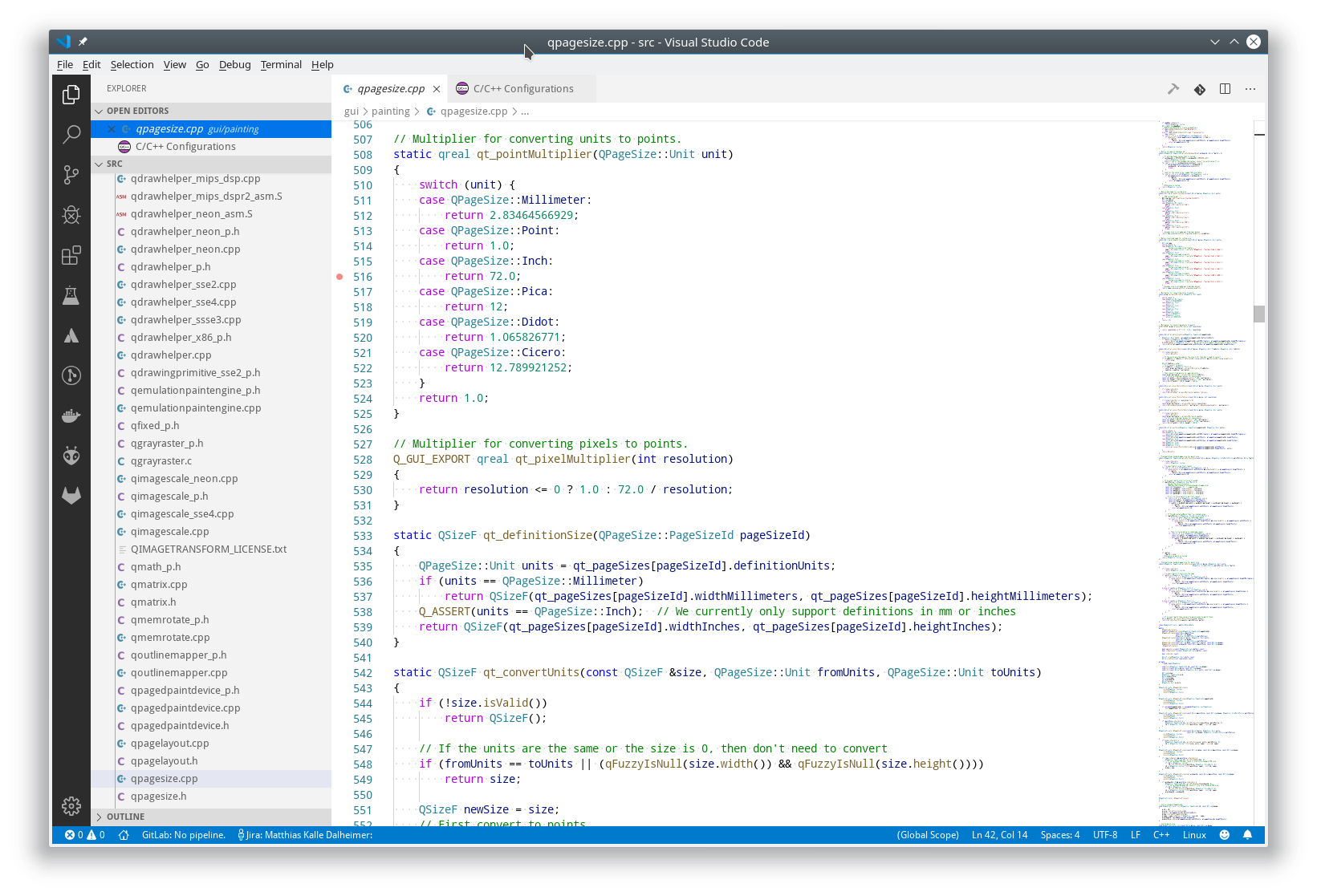

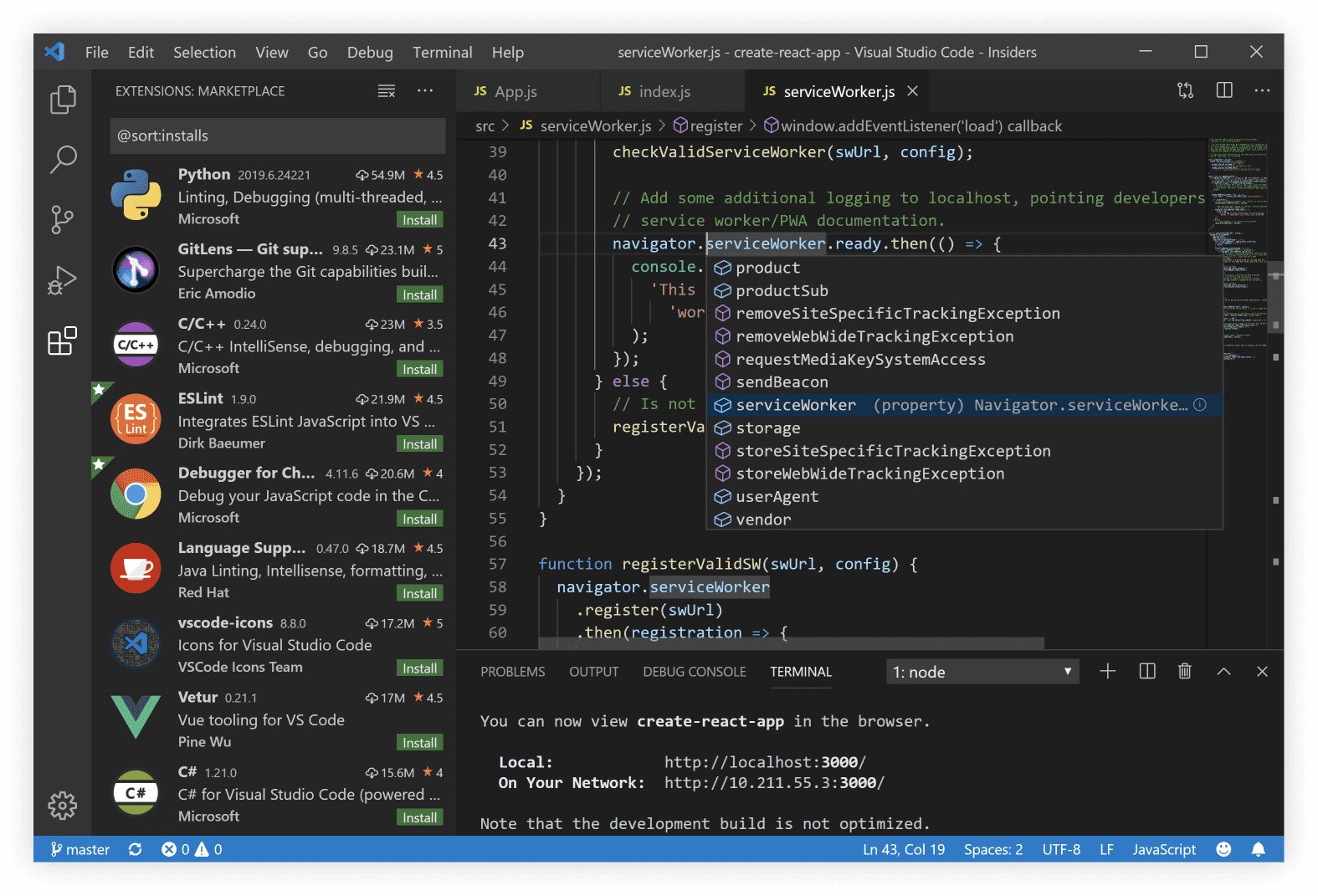

#Create cpp file with visual studio for mac mac os

In order to use the popular macOS clang compiler you should have Xcode and the Xcode tools installed. Since installing Xcode is covered quite well by Apple Developer Support, and is a very common task among technologists using macOS platforms, I will simply link to Apple's docs for that part.

Homebrew, aka brew, is a software package manager for the macOS platform that is heavily used among developers.

Set precision (number format): FP16, FP32, INT8, etc. Refer to the Supported Configurations section on the Supported Devices page to choose the relevant configuration. For input (iterate over all input info): input_data->setPrecision(InferenceEngine::Precision::U8); For output (iterate over all output info): output_data->setPrecision(InferenceEngine::Precision::FP32); By default, the input and output precision is set to Precision::FP32.

